In partial reinforcement, unlike continuous reinforcement, a behavior is reinforced only after certain intervals of time or certain numbers of responses, rather than every single time it occurs. Because reinforcement is scheduled after a certain number of correct responses or a certain interval of time, this form is also termed intermittent reinforcement.

This type of reinforcement is regarded as more powerful in maintaining or shaping behavior. Behaviors acquired under this form of scheduling have also been found to be more resistant to extinction.

Defining partial reinforcement simply as inconsistent or random reinforcement of responses could complicate matters from a learner’s point of view. Researchers have therefore classified four basic schedules of partial reinforcement that attempt to cover the various kinds of intervals and ratios between reinforcements.

1. Fixed-Interval Schedule

In a fixed interval schedule (FI), a fixed amount of time, say one minute, must elapse between the previous and subsequent times that reinforcement is made available for correct responses. The number of responses made during that period is irrelevant. As a result, the rate of behavior changes over the course of each interval.

The response rate is usually slower immediately after a reinforcement but increases steadily as the time for the next reinforcement comes closer.

Example: Someone getting paid hourly, regardless of the amount of work they do.
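The fixed-interval rule can be illustrated with a minimal sketch. It assumes the interval clock restarts at each reinforced response and that time is measured in seconds; the function name `fixed_interval` and the sample response times are hypothetical and chosen only for illustration.

```python
def fixed_interval(response_times, interval=60.0):
    """Return the response times that earn reinforcement under an FI schedule.

    Assumption: the clock restarts at each reinforced response.
    """
    reinforced = []
    available_at = interval  # reinforcement first becomes available after one interval
    for t in sorted(response_times):
        if t >= available_at:
            reinforced.append(t)
            available_at = t + interval  # next reinforcement is one interval away
    return reinforced


# Hypothetical response times in seconds; responses made too early earn nothing.
responses = [5, 20, 58, 61, 70, 119, 125, 130, 190]
print(fixed_interval(responses))  # [61, 125, 190]
```

Only the first response after each interval has elapsed is reinforced, no matter how many responses came before it.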

2. Variable-Interval Schedule

In a variable interval schedule (VI), varying amounts of time are allowed to elapse between the times reinforcement is made available. Reinforcement is contingent on the passage of time, but the length of the interval varies randomly. Each interval might vary from, say, one to five minutes, or from two to four minutes. The subject is unable to predict when the reinforcement will come; hence, the rate of responses is relatively steady.

Example: A fisherman waits by the shore for a certain amount of time, and he most likely catches about the same number of fish every day, but the interval between catches isn’t the same. If each fish is considered a reinforcement, then the reinforcement arrives at inconsistent intervals.
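A variable-interval rule can be sketched in the same spirit. This sketch assumes each interval is drawn uniformly between one and five minutes (the range mentioned above) and that the clock restarts at each reinforced response; the function name `variable_interval`, the uniform distribution, and the `seed` parameter are illustrative assumptions, not part of the definition.

```python
import random


def variable_interval(response_times, low=60.0, high=300.0, seed=0):
    """Return the response times that earn reinforcement under a VI schedule.

    Assumption: each waiting interval is drawn uniformly from [low, high] seconds.
    """
    rng = random.Random(seed)  # seeded so that a run can be repeated
    reinforced = []
    available_at = rng.uniform(low, high)
    for t in sorted(response_times):
        if t >= available_at:
            reinforced.append(t)
            # The next interval is unpredictable from the subject's point of view.
            available_at = t + rng.uniform(low, high)
    return reinforced


# A subject responding steadily every 10 seconds for one hour.
steady = list(range(0, 3600, 10))
print(variable_interval(steady))
```

Because the subject cannot tell when the next interval will end, a steady response rate is the only way to collect each reinforcement soon after it becomes available.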

3. Fixed-Ratio Schedule

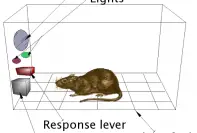

In a fixed ratio schedule (FR), reinforcement is provided after a fixed number of correct responses have been made. Reinforcement is determined by the number of correct responses, not by the passage of time. For example, suppose the hungry rat in the Skinner box has to press the lever five times before a food pellet appears. Reinforcement then follows every fifth response, and the ratio of responses to reinforcement stays the same throughout.

Example: A saleswoman gets an incentive for each pair of shoes she sells. Quality is irrelevant, since she is paid more for selling a higher number of shoes. A fixed ratio schedule therefore maximizes the quantity of output.
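The rat example, where every fifth lever press produces a pellet, can be written as a short sketch. The function name `fixed_ratio` is hypothetical, and responses are simply numbered from 1.

```python
def fixed_ratio(n_responses, ratio=5):
    """Return the numbers of the responses that are reinforced under an FR schedule."""
    # Every `ratio`-th correct response is reinforced; timing plays no role.
    return [i for i in range(1, n_responses + 1) if i % ratio == 0]


print(fixed_ratio(12))  # [5, 10]
```

The faster the subject responds, the sooner each reinforcement arrives, which is why this schedule rewards quantity of output.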

4. Variable-Ratio Schedule

In a variable ratio schedule (VR), reinforcement is provided after a variable number of correct responses have been made. In a 10:1 variable ratio schedule, the mean number of correct responses that must be made before a subsequent correct response is reinforced is 10, but the ratio of correct responses to reinforcement might be allowed to vary from, say, 1:1 to 20:1 on a random basis. The subject usually does not know when a reward may come. As a result, responses come at a high, steady rate.

Example: A practical example of a variable ratio schedule is a person who keeps checking his Facebook post from time to time to count the number of likes.
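A variable-ratio rule with a mean of 10 can be sketched as follows. As an assumption made for this illustration, the required number of responses is drawn uniformly from 1 to 19, so that the mean is exactly 10; the function name `variable_ratio` and the `seed` parameter are likewise hypothetical.

```python
import random


def variable_ratio(n_responses, mean_ratio=10, seed=0):
    """Return the numbers of the responses that are reinforced under a VR schedule.

    Assumption: the requirement is uniform on 1..(2 * mean_ratio - 1),
    which averages exactly mean_ratio.
    """
    rng = random.Random(seed)  # seeded so that a run can be repeated
    reinforced = []
    required = rng.randint(1, 2 * mean_ratio - 1)
    count = 0
    for i in range(1, n_responses + 1):
        count += 1
        if count == required:
            reinforced.append(i)
            count = 0
            # Draw a fresh, unpredictable requirement for the next reinforcement.
            required = rng.randint(1, 2 * mean_ratio - 1)
    return reinforced


hits = variable_ratio(10_000)
print(10_000 / len(hits))  # average responses per reinforcement, close to 10
```

Any single response might be the reinforced one, yet over many responses the average cost per reinforcement settles near the stated ratio.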

Critical Evaluation

Different schedules have different advantages. Ratio schedules are known to elicit higher rates of response than interval schedules, because reinforcement depends directly on the number of responses made.

For instance, consider a factory worker who is paid for each item he manufactures. This would motivate the worker to manufacture more.

Variable schedules are less predictable, so the behaviors they produce tend to resist extinction and to sustain themselves. Gambling and fishing are regarded as classic examples of variable schedules. Despite repeated lack of success, both the gambler and the fisherman remain hopeful that one more pull on the slot machine, or one more hour of patience, will change their luck.

Because partial reinforcement makes behavior resilient to extinction, it is often switched to after a new behavior has first been taught using a continuous reinforcement schedule.